Confluent Terraform Provider

First, create a 30-day free trial account by clicking the link below.

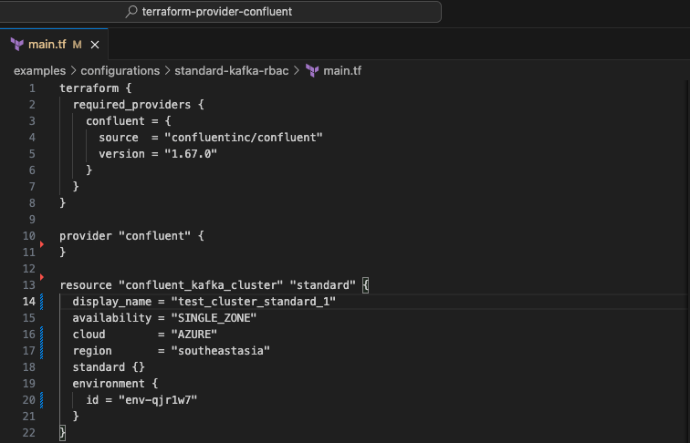

I am using one of the pre-built sample configurations in the Confluent Terraform Provider GitHub repository.

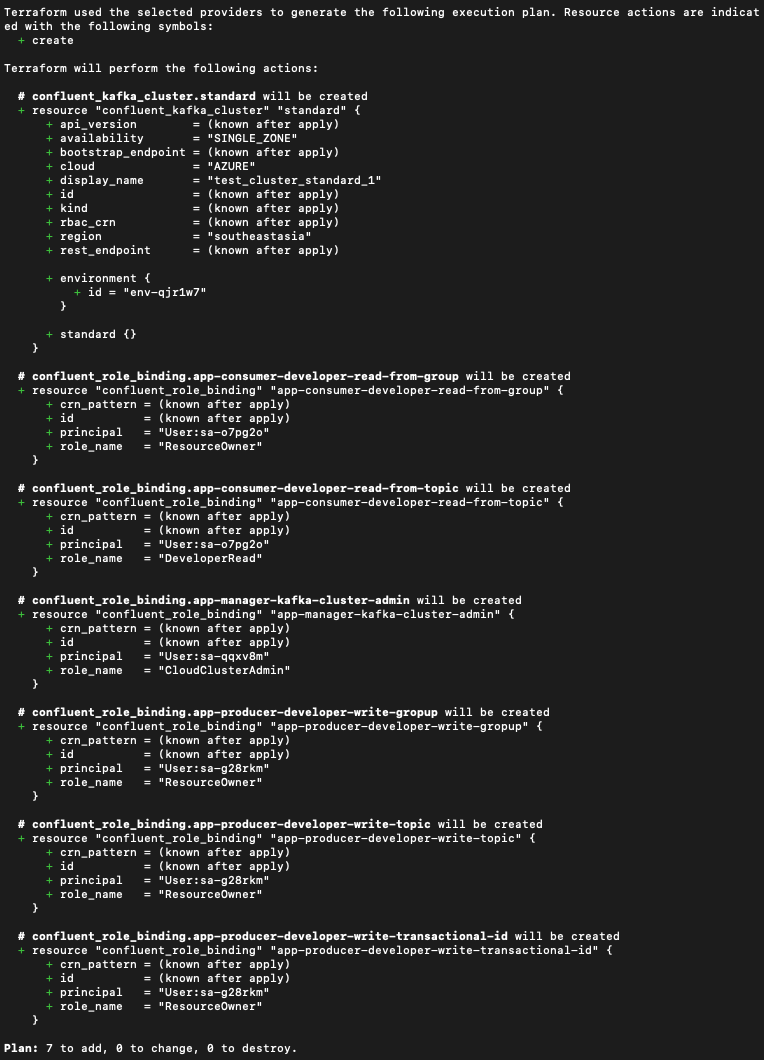

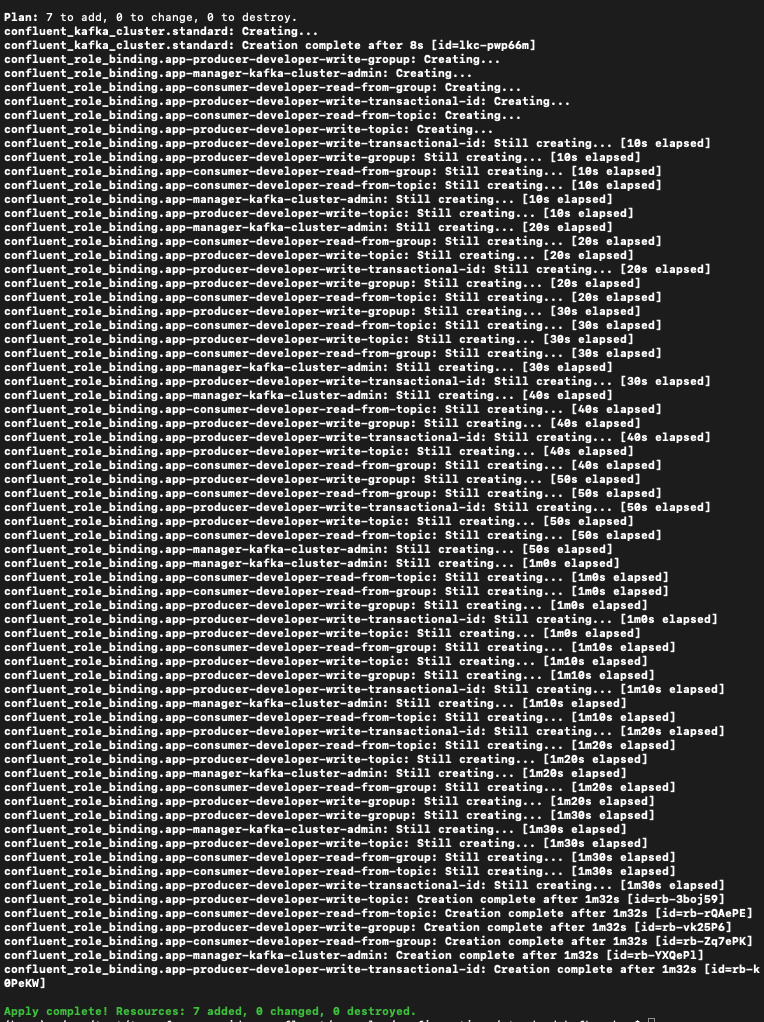

The main.tf file contains Terraform resources that represent the infrastructure I will be building, including a Kafka cluster and 6 RBAC role bindings.

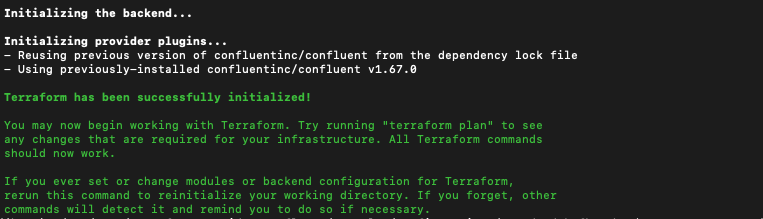

Download and install the provider defined in the configuration with this command:

terraform init

Run this command to preview the resources that will be created:

terraform plan

Apply the configuration. The -auto-approve flag skips the interactive confirmation prompt:

terraform apply -auto-approve

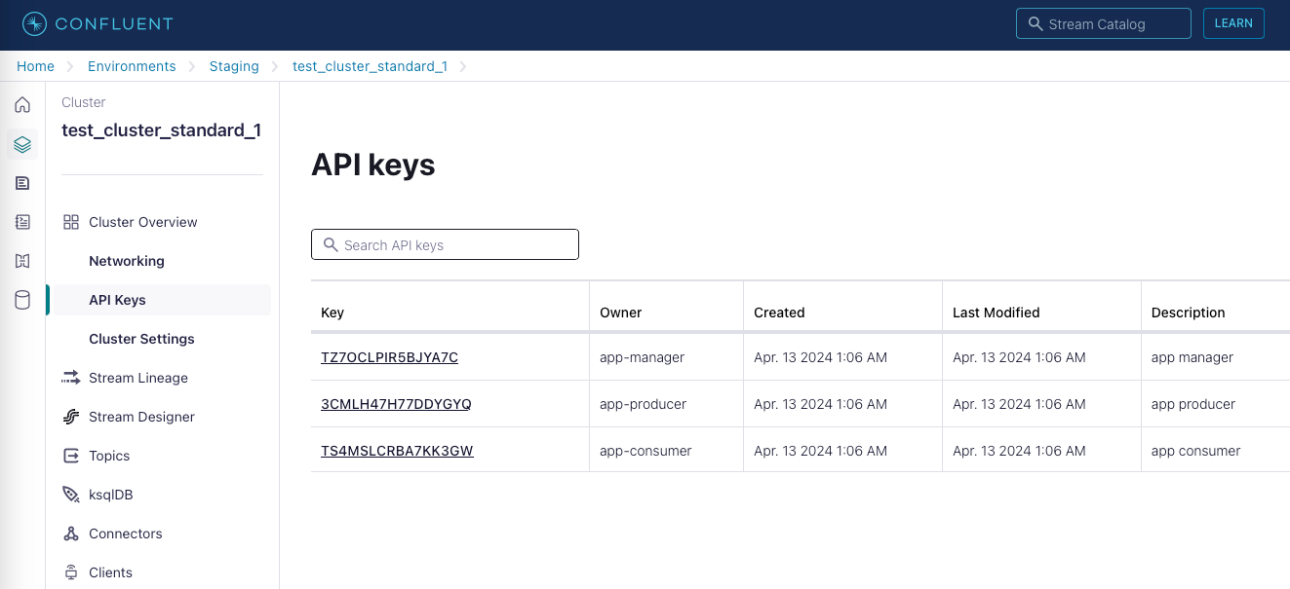

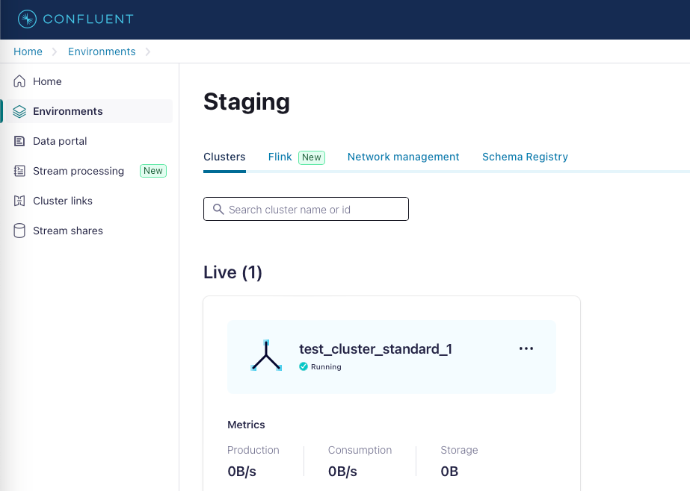
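The three Terraform commands above can also be wrapped in one function that applies exactly the plan that was reviewed, rather than re-planning with -auto-approve. This is only a sketch: `terraform_deploy` and the plan file name `tfplan` are names chosen here for illustration, and the function assumes the `terraform` binary is on the PATH.

```shell
# Sketch: run init, plan and apply in one directory, applying the saved plan.
# terraform_deploy and tfplan are illustrative names, not part of the sample repo.
terraform_deploy() {
  # $1 = directory that contains main.tf
  (
    cd "$1" || exit 1
    # Download the providers declared in the configuration.
    terraform init -input=false || exit 1
    # Save the plan so the apply step runs exactly what was planned.
    terraform plan -input=false -out=tfplan || exit 1
    # Applying a saved plan does not ask for confirmation.
    terraform apply -input=false tfplan
  )
}
```

Calling `terraform_deploy .` from the sample configuration directory would run all three steps and stop at the first one that fails.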

A Kafka cluster has been created in the Staging environment.

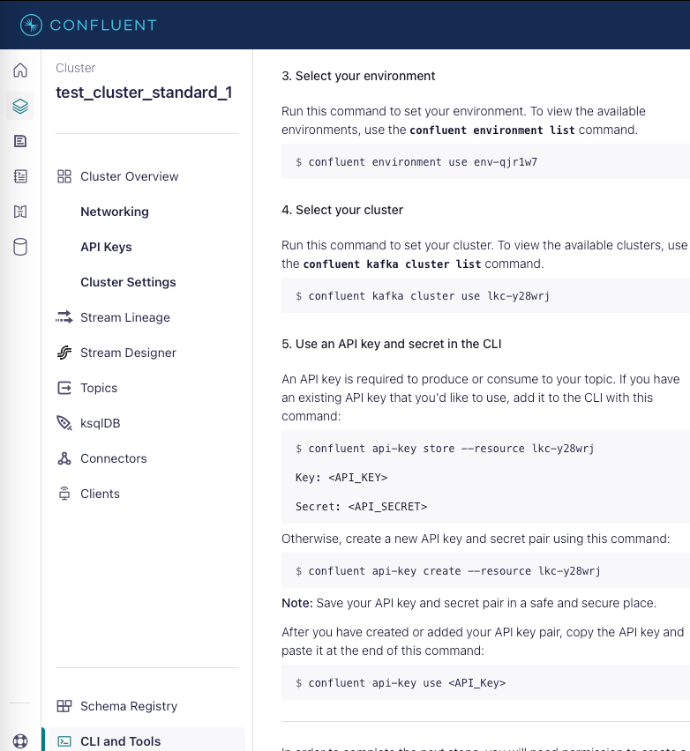

To generate an API Key, navigate to the 'CLI and Tools' section in the cluster menu. Detailed instructions on how to create the API key can be found there.

Next, I run shell scripts that generate the API keys, so that the keys are updated automatically for the producers and consumers in the following places:

1. Terraform scripts that import the topics

2. Kafka Connect deployment YAML

3. Spark consumer

Get the Kafka cluster ID:

#!/bin/bash

confluent environment use env-qjr1w7

confluent kafka cluster list > kafka_cluster.txt

cp kafka_cluster.txt ~/terraform-provider-confluent/examples/configurations/kafka-importer/imported_confluent_infrastructure

# Store the ID as kafka_id, the variable name the api-key commands below expect

kafka_id=$(grep "test_cluster_standard_1" kafka_cluster.txt | awk '{print $2}')
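The awk lookup above depends on which whitespace-separated column the ID lands in, which may shift if the table layout changes (for example, if a row carries a marker in its first column). As a hedged alternative, the sketch below splits each row on `|` and returns the field that starts with `lkc-` from the row whose name matches. It assumes the list output is a pipe-delimited table and that cluster IDs use the `lkc-` prefix; `extract_cluster_id` is a hypothetical helper, not part of the Confluent CLI.

```shell
# Sketch: find a cluster ID by cluster name in pipe-delimited list output.
# Assumes rows look like "  * | lkc-abc123 | my_cluster | ..." (an assumption).
extract_cluster_id() {
  # $1 = cluster name, $2 = file holding the saved list output
  awk -F'|' -v name="$1" '
    {
      found = 0; id = ""
      for (i = 1; i <= NF; i++) {
        field = $i
        # Trim surrounding blanks and any leading "*" marker.
        gsub(/^[ \t*]+|[ \t]+$/, "", field)
        if (field == name) found = 1
        if (field ~ /^lkc-/) id = field
      }
      # Print the ID only for the row whose name column matched.
      if (found && id != "") { print id; exit }
    }
  ' "$2"
}
```

With the file written earlier, the call would be `kafka_id=$(extract_cluster_id test_cluster_standard_1 kafka_cluster.txt)`. An empty result means no row matched, which is worth checking before creating keys.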

Generate the API keys:

confluent api-key create --resource $kafka_id --service-account sa-qqxv8m --description "app manager" > app-manager-key.txt

confluent api-key create --resource $kafka_id --service-account sa-g28rkm --description "app producer" > app-producer-key.txt

confluent api-key create --resource $kafka_id --service-account sa-o7pg2o --description "app consumer" > app-consumer-key.txt

cp app-manager-key.txt ~/terraform-provider-confluent/examples/configurations/kafka-importer/imported_confluent_infrastructure
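Because the three key files feed every later step, it may help to fail early when the cluster ID is empty or when the CLI output does not contain the expected labels. The sketch below wraps the same `confluent api-key create` flags used above; `create_app_key` is a hypothetical helper name, and the label check assumes the output contains the strings "API Key" and "API Secret", as the later grep commands also do.

```shell
# Sketch: create one API key and verify that the output file is usable.
# create_app_key is an illustrative helper, not a Confluent CLI command.
create_app_key() {
  # $1 = cluster ID, $2 = service account ID, $3 = description, $4 = output file
  if [ -z "$1" ]; then
    echo "cluster ID is empty; refusing to create an API key" >&2
    return 1
  fi
  confluent api-key create --resource "$1" --service-account "$2" \
    --description "$3" > "$4" || return 1
  # Later steps grep for these two labels, so confirm they are present now.
  grep -q "API Key" "$4" && grep -q "API Secret" "$4"
}
```

For example, `create_app_key "$kafka_id" sa-qqxv8m "app manager" app-manager-key.txt` would replace the first of the three commands above and return a non-zero status if anything looks wrong.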

Update the keys in the Kafka Connect deployment YAML and the Spark programs:

#!/bin/bash

# Replace the Cloud API key/secret and the Kafka cluster endpoint with the values from the Confluent portal

export CONFLUENT_CLOUD_API_KEY="xxxxxxxxxxxxxxxx"

export CONFLUENT_CLOUD_API_SECRET="xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

# kafka_id must already be set in this shell (see the cluster ID step above)

export KAFKA_ID="$kafka_id"

export KAFKA_REST_ENDPOINT="https://pkc-xxxxx.southeastasia.azure.confluent.cloud:443"

# Read the API Key and API Secret values from app-manager-key.txt

manager_api_key=$(grep "API Key" app-manager-key.txt | awk '{print $5}')

manager_api_secret=$(grep "API Secret" app-manager-key.txt | awk '{print $5}')

# Export the API Key and API Secret to variables

export manager_key="$manager_api_key"

export manager_secret="$manager_api_secret"

export KAFKA_API_KEY="$manager_api_key"

export KAFKA_API_SECRET="$manager_api_secret"

echo "API Key - manager: $manager_key"

echo "API Secret - manager: $manager_secret"

# Read the API Key and API Secret values from app-producer-key.txt

producer_api_key=$(grep "API Key" app-producer-key.txt | awk '{print $5}')

producer_api_secret=$(grep "API Secret" app-producer-key.txt | awk '{print $5}')

# Export the API Key and API Secret to variables

export producer_key="$producer_api_key"

export producer_secret="$producer_api_secret"

echo "API Key - producer: $producer_key"

echo "API Secret - producer: $producer_secret"

# Replace the new key/secret to the Kafka Connect yaml file

sed -e "s/@key/$producer_key/g" -e "s|@secret|$producer_secret|g" < kafka-connect-confluent-deploy-template.yaml > kafka-connect-confluent-deploy.yaml

# Read the API Key and API Secret values from app-consumer-key.txt

consumer_api_key=$(grep "API Key" app-consumer-key.txt | awk '{print $5}')

consumer_api_secret=$(grep "API Secret" app-consumer-key.txt | awk '{print $5}')

# Export the API Key and API Secret to variables

export consumer_key="$consumer_api_key"

export consumer_secret="$consumer_api_secret"

echo "API Key - consumer: $consumer_key"

echo "API Secret - consumer: $consumer_secret"

# Replace the new key/secret to the consumer spark file

# Use $HOME here because ~ is not expanded inside double quotes

consumer_path="$HOME/app/spark/jobs"

sed -e "s/@key/$consumer_key/g" -e "s|@secret|$consumer_secret|g" < $consumer_path/pyspark_test_aks_confluent_encrypt_template.py > $consumer_path/pyspark_test_aks_confluent_encrypt.py
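The script above repeats the same grep, awk and sed pattern for each role. A possible refactoring is sketched below: one helper reads a field from a key file and another fills a template, escaping characters that would otherwise break the sed replacement. The helper names (`read_key_field`, `escape_sed_replacement`, `render_template`) are hypothetical, and the parsing assumes key files with rows such as `| API Key | value |`, which is what the `$5` lookups above imply.

```shell
# Sketch: shared helpers for reading key files and filling @key/@secret templates.
# All three function names are illustrative and not part of any tool.
read_key_field() {
  # $1 = label ("API Key" or "API Secret"), $2 = key file
  awk -F'|' -v label="$1" '
    { l = $2; gsub(/^[ \t]+|[ \t]+$/, "", l) }
    l == label { v = $3; gsub(/^[ \t]+|[ \t]+$/, "", v); print v; exit }
  ' "$2"
}

escape_sed_replacement() {
  # Escape backslash, & and the | delimiter so sed inserts the value literally.
  printf '%s' "$1" | sed -e 's/[\\&|]/\\&/g'
}

render_template() {
  # $1 = key, $2 = secret, $3 = template file, $4 = output file
  local key secret
  key=$(escape_sed_replacement "$1")
  secret=$(escape_sed_replacement "$2")
  sed -e "s|@key|$key|g" -e "s|@secret|$secret|g" < "$3" > "$4"
}
```

With these helpers, the producer step could become `render_template "$(read_key_field "API Key" app-producer-key.txt)" "$(read_key_field "API Secret" app-producer-key.txt)" kafka-connect-confluent-deploy-template.yaml kafka-connect-confluent-deploy.yaml`, and the consumer step would follow the same shape.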

API keys have been generated for the producer and consumer, as illustrated below.